I gave a presentation to a church group this week about social media. This was part informative briefing, for a group of people mainly of my own generation who don’t have my links into that technology world; part discussion of some of the benefits and challenges; and part a suggestion of how people of faith might approach these issues.

At the heart of the talk were two case studies. First, something familiar to, and accepted by, most people there: buying a book online. A process with several stages: browsing the website; making the choice; setting up an account for the purchase; clicking “Buy”; and expecting the book in the mail.

Two or three issues, even here. First, online, look carefully at the browse results. For the book which I illustrated, the cheapest choice was actually an audio CD. Obvious, in a bookshop; not so obvious online. And possibly not what you would want.

Then, you have to provide personal and credit card details. A name and address: for shipping, and for verification. An email address: so the marketing emails probably start. And credit card details. You probably trust amazon.com (the example I used), but what about a company called Rogue Amoeba? I have, in fact, recently bought software online from them – something I’d been trialling for a while, so that’s how the trust was generated in this case. But trust is an issue. I’ve recently had a new card issued, because my card issuer detected fraudulent activity on the account.

And a third issue. Someone in the group had bought something through Amazon without realising it was actually being bought from a third party. When it didn’t arrive, Amazon had no liability. One lesson is to watch who you are really buying from; the other is that, on the web, it’s take it or leave it. You can’t negotiate terms and conditions. Yet how many of us read them?

So: even a by-now common transaction had lessons for us. And there’s a fundamental principle here. We recognise privacy issues, and the sensitivity of our personal data, but: people trade privacy for benefit.

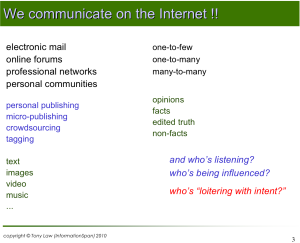

So we then looked at the world of social media: Facebook, Twitter, blogs, crowdsourcing. Human beings, as a species, communicate; and that’s how we use the Internet these days.

We communicate in a lot of ways, with different content. Sometimes – like with this blog – we intend a wide and unspecified audience and we write for public consumption. But not everyone realises that’s what they’re doing. Some people write in order to influence others: and that can be for good, or for not-so-good. And sometimes, people “loiter with intent”: that issue is, of course, most visible with concern about malign exploitation of children. It’s easy to pretend to be something you’re not.

The same principle applies: people trade privacy for benefit. In this case, it’s the benefit of keeping easily in touch with friends, with scattered families, with interest groups and so on. But in the online world it’s easier to make mistakes. The speed and scale make them potentially more dangerous. And something once published online can’t be recalled; someone, somewhere, will have it cached.

And it’s easier for someone to pretend to be what they are not.

I illustrated the various media using the second case study: the Equality Trust. This campaigning group offers a website/blog; a Facebook presence; and tweets. There is also a very conventional platform: a book (“The Spirit Level”, which I commend).

I showed how the same content appears in different ways on the different platforms. This is a positive example of the way they can be used, for an issue with which my audience could easily identify. But I also showed an example of the spam follower messages that arrive, which Twitter normally seems to catch pretty well: people are exploiting all of these platforms, and you must learn to recognise the clues (like: zero followers, only one tweet, and following lots of people).
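
For the more technically minded, those three clues can be written down as a simple rule of thumb. This is a minimal sketch in Python, assuming a hypothetical `Account` record holding follower, tweet and following counts, and an arbitrary threshold of 500 for “following lots of people”; it illustrates the idea only and is not how Twitter actually filters spam.

```python
from dataclasses import dataclass


# Hypothetical account summary; the field names are illustrative, not Twitter's API.
@dataclass
class Account:
    followers: int
    tweets: int
    following: int


# Assumed, arbitrary threshold for "following lots of people".
FOLLOWING_THRESHOLD = 500


def spam_clues(account: Account) -> list[str]:
    """Return which of the three warning signs this account shows."""
    clues = []
    if account.followers == 0:
        clues.append("zero followers")
    if account.tweets <= 1:
        clues.append("only one tweet")
    if account.following >= FOLLOWING_THRESHOLD:
        clues.append("following lots of people")
    return clues


def looks_like_spam(account: Account) -> bool:
    # Treat an account as suspicious only when all three clues appear together.
    return len(spam_clues(account)) == 3


suspect = Account(followers=0, tweets=1, following=2000)
friend = Account(followers=85, tweets=340, following=120)
print(looks_like_spam(suspect))  # True
print(looks_like_spam(friend))   # False
```

Requiring all three clues together is a deliberate choice in this sketch: any one on its own (a genuine newcomer with no followers yet, say) is common among real accounts.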


This, then, enabled us to discuss briefly the different styles of usage of these platforms. In my case, my Facebook account has few contacts, and they are mostly scattered family. I use LinkedIn for professional networking (we discussed the differences briefly), though some people use Facebook this way. I tweet irregularly, usually to promote something I’ve written or an event; for me this, again, is a professional platform, though I may mix in the occasional personal snippet.

Then we mentioned crowdsourcing. I looked at the Equality Trust on Wikipedia. We talked about the reliability of such sources, and also the ability of social media to react quickly: for example, the Wikipedia entry about the London bombings was started, extended, structured and restructured while the mainstream media were still trying to phone in their stories. We also discussed other topical areas (e.g. Iran, Burma or China, where the authorities are finding information increasingly hard to control).

We looked at the rise of commercial use of the web, and at the way Google changed the Internet: not by its search engine, but by its advertising model of monetisation, which funds most “free” services, including those that support churches, charities and other interest groups.

Then it was time to consider what shapes people’s privacy attitudes. A short recent paper by Nov and Wattal is a good introduction to academic research in this area, for those who have access and aren’t put off by the language of formal sociological hypothesis. This formalises what we may expect: people’s attitudes are shaped not just by their personal inclinations but by community and peer group attitudes (such as the more open culture generally among younger people, leading to more open sharing on Facebook); and by personal experience.

I added a personal perception: Society at large does not understand the concept of risk. One disaster outweighs many benefits in public opinion; but people also believe it “won’t happen to them”, and this may be particularly true among younger people with less life experience.

And Nov and Wattal reach one interesting conclusion: they suggest that conclusions about social computing privacy issues cannot necessarily be inferred from research on privacy more generally. The two should be studied separately.

Now the theology. In the late 1970s I was part of a church study group which published a report deriving a Christian approach to issues of developing technologies: energy, IT (we called it electronics in those days) and biotechnology. We were not trying to predict the technological future; we gave some examples, but recognised that things would come along which we had no concept of. In 1982, when the report was published, the Web was still ten years away: we anticipated online shopping and information services, for example, but we attributed them to the Prestel service.

Fundamentally we asserted that technologies themselves are neutral: it is the uses to which they are put (by human beings) that create the possibility of good or evil. But we suggested six questions for people of faith to ask of any developing technology.

- Is [a technology implementation] likely to change society in a direction more compatible with God’s ultimate purpose?

- Does it give people opportunity for growth, or diminish [their] stature as a child of, and fellow-worker with, God?

- Does it benefit only one section of the world community at the expense of some other group?

- Does [it] increase the possibility of one individual or group … being able to dominate another?

- Does it add to the real quality of life or detract from it?
    - and for whom?
    - is this the same as “standard of living”?

- Does it make proper use of world resources, taking into account the needs of the whole world and of future generations?

We had a lively discussion, including issues such as

- whether – or how – churches and other pro bono groups can/should use social media. There are already many online groups; online worship services and prayer lines; and, at this time of year, online Lenten studies and virtual pilgrimages

- how these media will play in the forthcoming UK General Election: President Obama’s election campaign capitalised on these significantly, and UK political parties are already there

- data retention by service providers: how long Google keeps your mail, even when you’ve “deleted” it (and service users expect backup, of course). Data mining: mobile phone companies can put together group profiles based on their records of where your phone’s been (are you a commuter, or a clubber?) and use this to make their service better fit your needs, but also market more to you.

A great evening and good discussion. These are ideas I want to go on developing; it was good to have a first chance to try them out.

Links and references:

- Nov, O., & Wattal, S., Social Computing Privacy Concerns: Antecedents and Effects. Proc. 27th International Conference on Human Factors in Computing Systems (CHI 2009), Boston. ACM, New York, 2009, 333–336

- Boyes, A.E. (ed). Shaping Tomorrow. Methodist Church Home Mission Division, 1982 (not available online)

- The Equality Trust (link from here to blogs, tweets, etc)

- Online church: St Pixels (initially sponsored by the Methodist Church)